Parallelizing Multiple Grid Cells Locally#

As users grow more familiar with MUSICA, they may wish to run simulations with a larger number of grid cells. By default, MUSICA executes on a single CPU core. This tutorial demonstrates how to use Dask to parallelize multiple grid cell simulations across multiple CPU cores. The solving of multiple grid cell simulations across multiple CPUs. It builds on the 1.multiple_grid_cells tutorial. Note that this tutorial serves as a proof-of-concept for local Dask usage as MUSICA is already efficient at solving a low number of grid cells without parallelization.

1. Importing Libraries#

In addition to libraries previously used throughout MUSICA tutorials, this tutorial uses Dask. Note that if you’d like to visualize Dask graphs, you will also need Graphviz installed.

[2]:

import musica

import musica.mechanism_configuration as mc

import matplotlib.pyplot as plt

import pandas as pd

import numpy as np

import dask

from dask import delayed, compute

from dask.distributed import Client, LocalCluster

from scipy.stats import qmc

import time

pd.set_option('display.float_format', str) # This is done to make the arrays more readable

np.set_printoptions(suppress=True) # This is done to make the arrays more readable

2. Creating a local cluster#

To run MUSICA in parallel locally, first create a Dask LocalCluster and connect a Client. You can adjust n_workers and threads_per_worker depending on your system and workload. Here we use 2 workers because we intend to simulate 2 grid cells in parallel—one per worker. Use the Dashboard link that prints below to visually track your performance.

[3]:

cluster = LocalCluster(

n_workers=2,

threads_per_worker=1

)

client=Client(cluster)

client

[3]:

Client

Client-a051be18-56a3-11f0-8c1e-6e865522305f

| Connection method: Cluster object | Cluster type: distributed.LocalCluster |

| Dashboard: http://127.0.0.1:8787/status |

Cluster Info

LocalCluster

af541be6

| Dashboard: http://127.0.0.1:8787/status | Workers: 2 |

| Total threads: 2 | Total memory: 32.00 GiB |

| Status: running | Using processes: True |

Scheduler Info

Scheduler

Scheduler-9afc8fcd-5807-4865-b429-7c8596674bc0

| Comm: tcp://127.0.0.1:52483 | Workers: 0 |

| Dashboard: http://127.0.0.1:8787/status | Total threads: 0 |

| Started: Just now | Total memory: 0 B |

Workers

Worker: 0

| Comm: tcp://127.0.0.1:52490 | Total threads: 1 |

| Dashboard: http://127.0.0.1:52493/status | Memory: 16.00 GiB |

| Nanny: tcp://127.0.0.1:52486 | |

| Local directory: /var/folders/3_/65138rls3r5c93lzl9dhrb980000gp/T/dask-scratch-space/worker-gkx0pv41 | |

Worker: 1

| Comm: tcp://127.0.0.1:52491 | Total threads: 1 |

| Dashboard: http://127.0.0.1:52492/status | Memory: 16.00 GiB |

| Nanny: tcp://127.0.0.1:52488 | |

| Local directory: /var/folders/3_/65138rls3r5c93lzl9dhrb980000gp/T/dask-scratch-space/worker-tcn0y8e9 | |

3. Setting up Grid Cells#

Due to the nature of Dask’s lazy evaluation model and its requirement that all objects passed between workers must be picklable, this tutorial follows a slightly different order than 1.multiple_grid_cells.ipynb. In particular, MUSICA components such as the solver, state, and chemical mechanism must be defined within a dask.delayed function defined later in the notebook.By instantiating them inside the delayed function, we ensure that each Dask worker constructs and uses its own local version, avoiding pickling issues and enabling clean, parallel execution.

[4]:

num_grid_cells = 2

To prepare inputs for multiple grid cells, we create a NumPy array with repeated values for temperature, pressure, and species concentrations. Each row represents a distinct grid cell, and the columns represent the physical and chemical variables.

This array is then split into separate variables to initialize the solver’s state. Concentrations are formatted as a dictionary, as required by set_concentrations(), and additional simulation metadata (e.g., time step, simulation length) is initialized. These steps closely follow the logic in steps 5 and 6 of the Multiple Grid Cells Tutorial.

[5]:

box_model_values = [[300, 101253.3, 5, 5, 5]]

box_model_values = np.repeat(box_model_values, num_grid_cells, axis = 0)

display(box_model_values)

array([[ 300. , 101253.3, 5. , 5. , 5. ],

[ 300. , 101253.3, 5. , 5. , 5. ]])

[6]:

temperatures = box_model_values[:, 0]

pressures = box_model_values[:, 1]

concentrations = {

"A": [],

"B": [],

"C": []

}

concentrations["A"] = box_model_values[:, 2]

concentrations["B"] = box_model_values[:, 3]

concentrations["C"] = box_model_values[:, 4]

concentrations_solved = []

time_step_length = 1

sim_length = 60

curr_time = 0

4. Creating a delayed function for Dask#

We define a @delayed function that sets up the Species, Reactions, Mechanism, and Solver for a single grid cell. The function returns the concentrations at each time step. This function also mirrors steps from the Multiple Grid Cells Tutorial, but is structured for Dask compatibility.

[ ]:

@delayed

def solve_one_cell(cell_index,temperatures,pressures,concentrations, sim_length, time_step):

# Define the system

A = mc.Species(name="A") # Create each of the species with their respective names

B = mc.Species(name="B")

C = mc.Species(name="C")

species = [A, B, C] # Bundle the species into a list

gas = mc.Phase(name="gas", species=species) # Create a gas phase object containing the species

r1 = mc.Arrhenius( # Create the reactions with their name, constants, reactants, products, and phase

name="A_to_B",

A=4.0e-3, # Pre-exponential factor

C=50, # Activation energy (units assumed to be K)

reactants=[A],

products=[B],

gas_phase=gas

)

r2 = mc.Arrhenius(

name="B_to_C",

A=4.0e-3,

C=50,

reactants=[B],

products=[C],

gas_phase=gas

)

mechanism = mc.Mechanism( # Define the mechanism which contains a name, the species, the phases, and reactions

name="musica_micm_example",

species=species,

phases=[gas],

reactions=[r1, r2]

)

#create the solver

solver = musica.MICM(mechanism=mechanism, solver_type=musica.SolverType.rosenbrock_standard_order)

#create the state

state = solver.create_state(1)

state.set_conditions(temperatures[cell_index],pressures[cell_index])

cur_concentrations = {key: value[cell_index] for key, value in concentrations.items()}

state.set_concentrations(cur_concentrations)

time = 0.0

result = []

track_time = []

while time <= sim_length:

solver.solve(state, time)

result.append(state.get_concentrations().copy())

track_time.append(time)

time += time_step

return {

"times": np.array(track_time),

"concentrations": np.stack(result)

}

5. Solving#

We use a Python list comprehension to create one delayed task per grid cell. Each call to solve_one_cell(…) returns a Dask Delayed object, which represents a unit of work that hasn’t been executed yet. These tasks are stored in the tasks list and will be executed in parallel when we call compute().

[34]:

# Dispatch one task per grid cell

tasks = [solve_one_cell(i,temperatures,pressures,concentrations, sim_length, time_step_length) for i in range(num_grid_cells)]

# visualize the Dask graph

results = compute(*tasks)

dask.visualize(*tasks)

[34]:

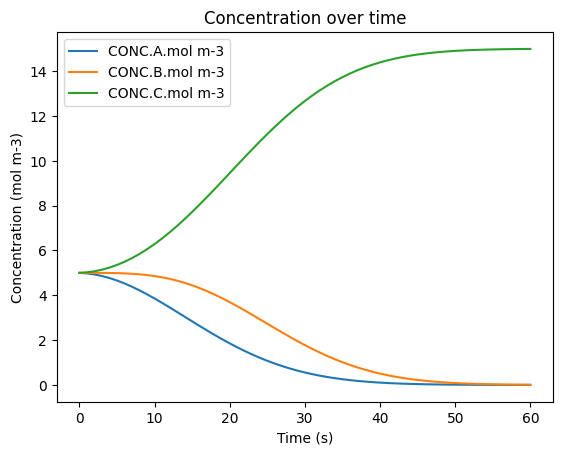

6. Visualizing Results#

As in the Multiple Grid Cells Tutorial, the results can now be visualized over time and are consistent with those obtained without using Dask.

[ ]:

# Extract the array from the tuple

time_array = results[0]['times']

data_array = results[0]['concentrations']

# Flatten the single-element lists in each dictionary

flat_dicts = [{k: v[0] for k, v in d.items()} for d in data_array]

# Convert to DataFrame

df = pd.DataFrame(flat_dicts)

df['time.s'] = data_array = results[0]['times']

#rename columns

df.rename(columns={'A': 'CONC.A.mol m-3','B':'CONC.B.mol m-3','C':'CONC.C.mol m-3'}, inplace=True)

# Show the result

# Show all rows

pd.set_option('display.max_rows', None)

display(df)

#plot

df.plot(x='time.s', y=['CONC.A.mol m-3', 'CONC.B.mol m-3', 'CONC.C.mol m-3'], title='Concentration over time', ylabel='Concentration (mol m-3)', xlabel='Time (s)')

plt.show()

| CONC.A.mol m-3 | CONC.B.mol m-3 | CONC.C.mol m-3 | time.s | |

|---|---|---|---|---|

| 0 | 5.0 | 5.0 | 5.0 | 0.0 |

| 1 | 4.976428528398802 | 4.999944351093875 | 5.0236271205073235 | 1.0 |

| 2 | 4.929618429433882 | 4.999502304184354 | 5.070879266381759 | 2.0 |

| 3 | 4.860227571898497 | 4.998027906909858 | 5.141744521191641 | 3.0 |

| 4 | 4.769223461910271 | 4.994590343685047 | 5.236186194404681 | 4.0 |

| 5 | 4.657860795721631 | 4.988017567302154 | 5.3541216369762115 | 5.0 |

| 6 | 4.527652679026353 | 4.9769510981867855 | 5.49539622278686 | 6.0 |

| 7 | 4.380336435836852 | 4.959909340283517 | 5.65975422387963 | 7.0 |

| 8 | 4.217835086228851 | 4.935356388404806 | 5.846808525366342 | 8.0 |

| 9 | 4.042215685186966 | 4.901773090409533 | 6.056011224403497 | 9.0 |

| 10 | 3.855645780447624 | 4.857727088113415 | 6.28662713143896 | 10.0 |

| 11 | 3.6603492643036737 | 4.801938691142486 | 6.537712044553839 | 11.0 |

| 12 | 3.458562863787737 | 4.733339725758781 | 6.808097410453479 | 12.0 |

| 13 | 3.252494610512199 | 4.651122489164782 | 7.096382900323015 | 13.0 |

| 14 | 3.0442846925682847 | 4.554778598766272 | 7.400936708665442 | 14.0 |

| 15 | 2.8359704984770615 | 4.444124054435992 | 7.719905447086947 | 15.0 |

| 16 | 2.6294560693405855 | 4.3193112630886885 | 8.051232667570726 | 16.0 |

| 17 | 2.426486586564641 | 4.180827795709497 | 8.392685617725858 | 17.0 |

| 18 | 2.228628262140719 | 4.029482439264608 | 8.741889298594673 | 18.0 |

| 19 | 2.0372537853108215 | 3.866379699486838 | 9.096366515202343 | 19.0 |

| 20 | 1.8535332921332346 | 3.6928843685085195 | 9.453582339358244 | 20.0 |

| 21 | 1.6784306527893238 | 3.5105781208300084 | 9.810991226380661 | 21.0 |

| 22 | 1.5127047211405233 | 3.321210331143579 | 10.166084947715891 | 22.0 |

| 23 | 1.356915066356869 | 3.1266454146790843 | 10.51643951896404 | 23.0 |

| 24 | 1.2114316101722387 | 2.9288089788390224 | 10.859759410988733 | 24.0 |

| 25 | 1.0764475267344704 | 2.729634954205287 | 11.19391751906024 | 25.0 |

| 26 | 0.9519947249254646 | 2.5310156590366075 | 11.51698961603792 | 26.0 |

| 27 | 0.837961223947276 | 2.3347564634880977 | 11.82728231256462 | 27.0 |

| 28 | 0.7341097493324982 | 2.1425363796482437 | 12.123353871019258 | 28.0 |

| 29 | 0.6400969149160579 | 1.9558755335759015 | 12.40402755150804 | 29.0 |

| 30 | 0.5554924126827311 | 1.7761100976759248 | 12.668397489641347 | 30.0 |

| 31 | 0.4797977024313251 | 1.6043748959616275 | 12.915827401607048 | 31.0 |

| 32 | 0.41246377244198124 | 1.4415935581573638 | 13.145942669400656 | 32.0 |

| 33 | 0.35290762650931823 | 1.2884758048037372 | 13.358616568686944 | 33.0 |

| 34 | 0.30052723785431357 | 1.1455212042292484 | 13.55395155791644 | 34.0 |

| 35 | 0.2547147930736237 | 1.0130285591192634 | 13.732256647807118 | 35.0 |

| 36 | 0.21486812652973247 | 0.8911099572756886 | 13.894021916194585 | 36.0 |

| 37 | 0.18040031517217622 | 0.7797084563927418 | 14.039891228435092 | 37.0 |

| 38 | 0.15074746410949966 | 0.678618361746843 | 14.170634174143665 | 38.0 |

| 39 | 0.12537476336137934 | 0.5875070918377543 | 14.287118144800878 | 39.0 |

| 40 | 0.1037809357360195 | 0.5059377019084463 | 14.390281362355545 | 40.0 |

| 41 | 0.08550122484003099 | 0.4333912397547042 | 14.481107535405275 | 41.0 |

| 42 | 0.07010909140420013 | 0.36928823295778984 | 14.560602675638018 | 42.0 |

| 43 | 0.0572167962977099 | 0.3130087426444584 | 14.629774461057835 | 43.0 |

| 44 | 0.04647505093834706 | 0.2639105579237306 | 14.689614391137928 | 44.0 |

| 45 | 0.037571911560932474 | 0.2213452402398352 | 14.741082848199234 | 45.0 |

| 46 | 0.030231084311409015 | 0.18467185233856923 | 14.785097063350019 | 46.0 |

| 47 | 0.024209794704965863 | 0.15326831813561934 | 14.822521887159413 | 47.0 |

| 48 | 0.01929635886444594 | 0.12654045469718583 | 14.854163186438367 | 48.0 |

| 49 | 0.015307576263053525 | 0.10392879432885158 | 14.880763629408094 | 49.0 |

| 50 | 0.012086045407999296 | 0.08491337312980626 | 14.903000581462189 | 50.0 |

| 51 | 0.00949748582941424 | 0.06901670299746232 | 14.921485811173119 | 51.0 |

| 52 | 0.007428132522030055 | 0.05580516841273506 | 14.936766699065231 | 52.0 |

| 53 | 0.005782253100782559 | 0.0448890993856103 | 14.949328647513605 | 53.0 |

| 54 | 0.00447982369715577 | 0.03592177000563525 | 14.959598406297205 | 54.0 |

| 55 | 0.003454387225865738 | 0.028597560570059688 | 14.96794805220407 | 55.0 |

| 56 | 0.002651107160467822 | 0.022649502677897917 | 14.974699390161629 | 56.0 |

| 57 | 0.0020250213465128905 | 0.017846403257376808 | 14.980128575396103 | 57.0 |

| 58 | 0.0015394935543717359 | 0.013989717274570492 | 14.984470789171048 | 58.0 |

| 59 | 0.0011648552812954611 | 0.010910311593626494 | 14.987924833125069 | 59.0 |

| 60 | 0.000877226570820777 | 0.008465235540415051 | 14.990657537888755 | 60.0 |