Description

- This is a minimal example that demonstrates how to download the same Zarr object from AWS using the S3 protocol and, separately, the OSDF (Open Science Data Federation) protocol.

- This notebook does not use Intake catalogs to locate the data, nor Dask to scale up the job.

from matplotlib import pyplot as plt

import xarray as xr

import fsspec

import pandas as pd

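import s3fs

import fsspec.implementations.http as fshttp

# pelicanfs provides the fsspec filesystem behind the osdf:// protocol
from pelicanfs.core import OSDFFileSystem, PelicanMap

# Connect to AWS S3 storage (anonymous access to the public bucket)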

fs = s3fs.S3FileSystem(anon=True)

# create a MutableMapping from a store URL

mapper = fs.get_mapper("s3://cmip6-pds/CMIP6/CMIP/AS-RCEC/TaiESM1/1pctCO2/r1i1p1f1/Amon/tas/gn/v20200225/")

# make sure to specify that metadata is consolidated

ds_s3 = xr.open_zarr(mapper, consolidated=True)

ds_s3
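Why `consolidated=True` matters: in the Zarr v2 format, every group and array keeps its own small metadata documents (`.zgroup`, `.zarray`, `.zattrs`), and the object returned by `get_mapper` behaves like a dictionary from key names to bytes. Consolidation copies all of those documents into a single `.zmetadata` key, so a reader can fetch one object instead of one per array. That saving matters when every fetch is a network round trip. The sketch below mimics this with a plain in-memory store. `CountingStore`, `consolidate`, and the simplified metadata contents are hypothetical illustrations, not Zarr's or fsspec's implementation.

```python
import json
from collections.abc import MutableMapping


class CountingStore(MutableMapping):
    """Hypothetical dict-backed Zarr store that counts key reads.

    It stands in for a remote mapper, where every read would be a network request.
    """

    def __init__(self):
        self._data = {}
        self.reads = 0

    def __getitem__(self, key):
        self.reads += 1
        return self._data[key]

    def __setitem__(self, key, value):
        self._data[key] = value

    def __delitem__(self, key):
        del self._data[key]

    def __iter__(self):
        return iter(self._data)

    def __len__(self):
        return len(self._data)


def consolidate(store):
    """Copy every Zarr v2 metadata document into a single '.zmetadata' key."""
    metadata = {
        key: json.loads(store[key])
        for key in list(store)
        if key.endswith((".zgroup", ".zarray", ".zattrs"))
    }
    store[".zmetadata"] = json.dumps(
        {"zarr_consolidated_format": 1, "metadata": metadata}
    ).encode()


# Simplified layout: one group holding three arrays (real .zarray documents
# also record shape, chunks, dtype, compressor, and so on).
store = CountingStore()
store[".zgroup"] = json.dumps({"zarr_format": 2}).encode()
for name in ("tas", "lat", "lon"):
    store[f"{name}/.zarray"] = json.dumps({"zarr_format": 2}).encode()
    store[f"{name}/.zattrs"] = json.dumps({}).encode()
consolidate(store)

# Unconsolidated: one read per metadata key
store.reads = 0
per_key = {k: json.loads(store[k]) for k in list(store) if k != ".zmetadata"}
reads_unconsolidated = store.reads

# Consolidated: a single read returns the same documents
store.reads = 0
merged = json.loads(store[".zmetadata"])["metadata"]
reads_consolidated = store.reads
```

With seven metadata keys, the unconsolidated path needs seven reads and the consolidated path needs one. Over S3 or OSDF, each of those reads is a separate request.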

%%time

ds_osdf = xr.open_zarr('osdf:///aws-opendata/us-west-2/cmip6-pds/CMIP6/CMIP/AS-RCEC/TaiESM1/1pctCO2/r1i1p1f1/Amon/tas/gn/v20200225/')

ds_osdf
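`%%time` is an IPython cell magic, so it only works inside a notebook. To compare how long the S3 and OSDF opens take in a plain Python script, a small context manager around `time.perf_counter` can do a similar job. The `timed` helper below is a hypothetical sketch. Unlike `%%time`, it records wall-clock time only, not CPU time.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label, results):
    """Store the wall-clock seconds spent inside the `with` block under `label`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        results[label] = time.perf_counter() - start


timings = {}
with timed("sleep", timings):
    time.sleep(0.05)  # stand-in for xr.open_zarr(...) on the S3 or OSDF URL
```

In the notebook, you would wrap `xr.open_zarr(mapper, consolidated=True)` under an `"s3"` label and the `osdf:///...` open under an `"osdf"` label. Repeated runs may differ because OSDF serves objects from caches once they have been fetched.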

# test = xr.open_zarr('s3://cmip6-pds/CMIP6/HighResMIP/CMCC/CMCC-CM2-HR4/highresSST-present/r1i1p1f1/Amon/ta/gn/v20170706/,,20170706',anon=True)

%%time

surface_air_temps = ds_osdf['tas']

surface_air_temps

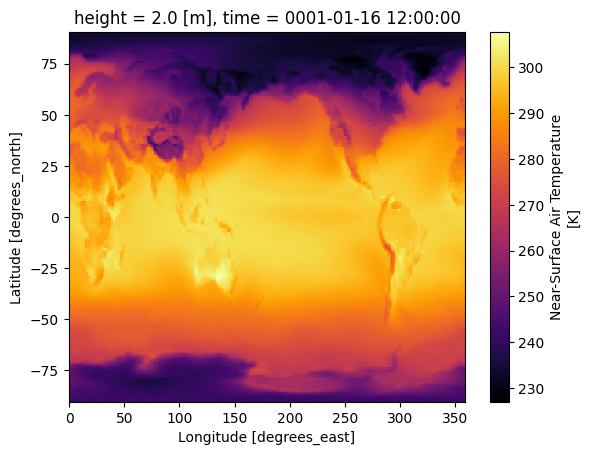

Plot the first time step

%%time

surface_air_temps.isel(time=0).plot(cmap='inferno')

CPU times: user 784 ms, sys: 369 ms, total: 1.15 s

Wall time: 2.4 s

df = pd.read_csv("https://cmip6-pds.s3.amazonaws.com/pangeo-cmip6.csv")

df_subset = df.query("activity_id=='CMIP' & table_id=='Amon' & variable_id=='tas'")

# Replace 'tas' with 'tasmax' or 'tasmin' if you need "new" data that has not already been loaded into a cache

df_subset
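The `query` call keeps only the rows whose `activity_id`, `table_id`, and `variable_id` columns all match. The sketch below expresses the same filter without pandas, using only the standard `csv` module. It also shows what the tip about swapping `'tas'` for `'tasmax'` changes. `select_zstores` is a hypothetical helper. The sample catalog is trimmed to the four columns used here. Only its first row's `zstore` URL comes from this notebook; the other rows are made up.

```python
import csv
import io


def select_zstores(csv_text, variable_id, activity_id="CMIP", table_id="Amon"):
    """Return the zstore URLs of catalog rows that match all three IDs."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        row["zstore"]
        for row in reader
        if row["activity_id"] == activity_id
        and row["table_id"] == table_id
        and row["variable_id"] == variable_id
    ]


# Hypothetical, trimmed-down catalog: the real CSV has more columns and rows.
SAMPLE_CSV = """activity_id,table_id,variable_id,zstore
CMIP,Amon,tas,s3://cmip6-pds/CMIP6/CMIP/AS-RCEC/TaiESM1/1pctCO2/r1i1p1f1/Amon/tas/gn/v20200225/
CMIP,Amon,tasmax,s3://example-bucket/hypothetical/tasmax/
CMIP,day,tas,s3://example-bucket/hypothetical/day/tas/
"""

tas_stores = select_zstores(SAMPLE_CSV, "tas")
tasmax_stores = select_zstores(SAMPLE_CSV, "tasmax")
```

The `day` row is excluded from both results because its `table_id` is not `Amon`. The pandas equivalent for the second call is `df.query("activity_id=='CMIP' & table_id=='Amon' & variable_id=='tasmax'")`.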

Download multiple files

Rewrite the zstore (object) paths to use the OSDF protocol:

object_paths = df_subset['zstore'].str.replace('s3://','osdf:///aws-opendata/us-west-2/',regex=False).to_list()
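The rewrite swaps the `s3://` scheme for the `osdf:///aws-opendata/us-west-2/` prefix and leaves the bucket and key unchanged. The two URLs opened at the top of this notebook follow the same pattern. The sketch below captures that rule as a standalone function. The name `s3_to_osdf` is hypothetical. The hard-coded `us-west-2` prefix assumes the bucket lives in that region, as this notebook assumes for `cmip6-pds`.

```python
S3_PREFIX = "s3://"
OSDF_PREFIX = "osdf:///aws-opendata/us-west-2/"  # namespace used in this notebook


def s3_to_osdf(url: str) -> str:
    """Map an s3://bucket/key URL to the corresponding OSDF URL."""
    if not url.startswith(S3_PREFIX):
        raise ValueError(f"not an S3 URL: {url!r}")
    # Replace only the leading scheme, keeping bucket and key as-is
    return OSDF_PREFIX + url[len(S3_PREFIX):]


s3_url = "s3://cmip6-pds/CMIP6/CMIP/AS-RCEC/TaiESM1/1pctCO2/r1i1p1f1/Amon/tas/gn/v20200225/"
osdf_url = s3_to_osdf(s3_url)
```

`str.replace(..., regex=False)` would also rewrite any later occurrence of `s3://` inside a path. Anchoring on the prefix avoids that, and the function can be applied with `df_subset['zstore'].map(s3_to_osdf)`.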

object_paths[:5]

['osdf:///aws-opendata/us-west-2/cmip6-pds/CMIP6/CMIP/CNRM-CERFACS/CNRM-CM6-1/1pctCO2/r1i1p1f2/Amon/tas/gr/v20180626/',
 'osdf:///aws-opendata/us-west-2/cmip6-pds/CMIP6/CMIP/NOAA-GFDL/GFDL-CM4/1pctCO2/r1i1p1f1/Amon/tas/gr1/v20180701/',
 'osdf:///aws-opendata/us-west-2/cmip6-pds/CMIP6/CMIP/NOAA-GFDL/GFDL-CM4/abrupt-4xCO2/r1i1p1f1/Amon/tas/gr1/v20180701/',
 'osdf:///aws-opendata/us-west-2/cmip6-pds/CMIP6/CMIP/NOAA-GFDL/GFDL-CM4/piControl/r1i1p1f1/Amon/tas/gr1/v20180701/',
 'osdf:///aws-opendata/us-west-2/cmip6-pds/CMIP6/CMIP/NOAA-GFDL/GFDL-ESM4/1pctCO2/r1i1p1f1/Amon/tas/gr1/v20180701/']

len(object_paths)

1160

# Maximum number of objects to download

n_max = 2

%%time

for i in range(n_max):
    ds = xr.open_zarr(object_paths[i])
    tas = ds['tas']
    # Explicitly load the tas (near-surface air temperature) data;
    # compute() returns an in-memory copy, which forces the download
    tas_loaded = tas.compute()
    print(f' Loaded data from {i}_th zarr store')

Loaded data from 0_th zarr store

Loaded data from 1_th zarr store

CPU times: user 7.76 s, sys: 3.24 s, total: 11 s

Wall time: 31.7 s
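The recorded timings help explain why `n_max` is kept small. The back-of-the-envelope sketch below assumes every store takes roughly as long as the two timed above. Store sizes and cache state vary, so treat the result as an order-of-magnitude estimate for a serial loop, not a measurement.

```python
wall_seconds = 31.7   # recorded wall time for the loop above
stores_loaded = 2     # n_max
total_stores = 1160   # len(object_paths)

per_store = wall_seconds / stores_loaded             # about 15.85 s per store
estimated_hours = per_store * total_stores / 3600    # serial estimate for all stores
```

Under that assumption, loading all 1160 stores one after another would take roughly 5.1 hours.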