ACS instance profiling

ACS isn’t devoid of bugs, nor is it the most efficient process. That isn’t to say that it has to be 100% efficient to be successful, but it would be nice to have to use only resources it absolutely needs and little else. Go provides for some useful introspective tooling to perform some useful profiling.

HTTP endpoints

ACS has been configured to allow for completely open access to the read-only route data

rooted at

debug/stats

The debug/stats route emits some potentially useful data about the HTTP traffic. You should get something like below.

{

"pid":17181,

"uptime":"18m37.244701274s",

"uptime_sec":1117.244701274,

"time":"2018-06-25 09:03:20.60792218 -0600 MDT m=+1121.465433394",

"unixtime":1529939000,

"status_code_count":{

"401":1

},

"total_status_code_count":{

"200":228,

"401":1118,

"404":6

},

"count":1,

"total_count":1352,

"total_response_time":"2m0.726762259s",

"total_response_time_sec":120.726762259,

"average_response_time":"89.294942ms",

"average_response_time_sec":0.089294942

}

debug/pprof/

This contains the standard Go profiler support routes. There are multiple tools that can hook into this and create profile dumps that can be analyzed at a later time, or realtime. This document is not sufficient for explainging all the useability, and is meant to be a guide.

To be of any real use, the following setting in ACS’s general.yaml must be set to something on the order of 90s, otherwise longer profiling measurement periods cannot be attempted.:

Network:

# Setting this to a low period, might prematurely close connections

# Too high and you risk allowing too many connection to remain open

# 90-180s might be 'just right'

WriteTimeout: 90s

Web

As a first pass, you can simply browse to http://acs:port/debug/pprof/ (note the dangling /) and be presented with a really barren, but useful, webpage,

pprof

This is the standard tool provided with Go. You should really consult pprof’s pkg docs, and the Profiling Go Programs Blog Post. General usage is via

go tool pprof http://acs:port/debug/pprof/profile

or similar for the /profile, /heap, /goroutine, /block, /mutex, and /threadcreate subroutes.

pprof can create some useful diagrams assuming you have graphviz:

(brew|apt|dep|pkg) install gperftools graphiviz

pprof –web localhost:6060/debug

go-torch

go-torch is a tool to generate torch diagrams to aid in application profiling. You first need to install the tool via:

go get github.com/uber/go-torch #install go-torch

cd $GOPATH/src/github.com/uber/go-torch

git clone https://github.com/brendangregg/FlameGraph.git #fetch FlameGraph deps.

When that is done, you should be able to directly connect to a running acs instance and create svg plots via:

go-torch -u http://avaps-dev.eol.ucar.edu:8080/debug/pprof/ --file="torch.svg"

Charts

ACS makes use of the github.com/mkevac/debugcharts package, so when connected

to the internet (eg, ground testing), you should be able to access

https://acs:port/debug/charts/ for some per-second

updated charts.

Memory Leak Debugging

The best way to learn is usually an example. Over the time frame of roughly July 3rd to July 12th, I noticed that the ACS instance was gobbling up large amounts of memmory. just idling. Which is bad. Most of the commits in that range are various steps of locating the problems and attempting to fix it. The problem was first noticed by looking at the charts route which has some pretty useful time series charts that showed the heap growing indefintely.

Time to roll up the sleeves. First I cobbled together a script that automated gathering route data from analysis later - the key part is below:

function sampleize() {

go tool pprof -proto "$URL/debug/pprof/$1" 2> $2.log > $2.pb.gz

go tool pprof -top $4 $2.pb.gz >> $2.log

go-torch --title "$3" -f $2.svg $2.pb.gz &> /dev/null

}

sampleize "heap" "heap" "Heap" "-sample_index=inuse_space"

What this does is grab the heap data from the /debug/pprof/heap route and dumps it to

a gzipped protocol buffer file. From there, we can use the go tool pprof tooling to analyze the snapshot, and start to get a useful idea of what is going on. Ok, so now we have a snapshot, what can we do with it? Essentially, where’s the (memory leak) beef?

For our usage, I have taken 3 snapshots: one taken just after startup, and the next two are taken about 10 mins after each other. The first thing to do is check is what is happening just after start up:

λ go tool pprof 1c095e0818f595cf7e6f065334cda1aadfbc09ec/heap.pb.gz

File: acs-daemon

Build ID: 9f52a8408ff14ff129c7b44bfd637d7f350a1139

Type: inuse_space

Time: Jul 16, 2018 at 2:05pm (MDT)

Entering interactive mode (type "help" for commands, "o" for options)

(pprof) top 20

Showing nodes accounting for 48101.46kB, 100% of 48101.46kB total

Showing top 20 nodes out of 151

flat flat% sum% cum cum%

18963.79kB 39.42% 39.42% 18963.79kB 39.42% reflect.unsafe_New

9040.26kB 18.79% 58.22% 9040.26kB 18.79% github.com/NCAR/ACS/vendor/github.com/mkevac/debugcharts.init.0

3586.06kB 7.46% 65.67% 3586.06kB 7.46% net/textproto.(*Reader).ReadMIMEHeader

2626.67kB 5.46% 71.13% 2626.67kB 5.46% regexp.(*bitState).reset

2048.69kB 4.26% 75.39% 5120.98kB 10.65% github.com/NCAR/ACS/vendor/github.com/gorilla/sessions.(*Registry).Get

2048.25kB 4.26% 79.65% 2048.25kB 4.26% database/sql.cloneBytes (inline)

1536.31kB 3.19% 82.85% 1536.31kB 3.19% net/http.(*Request).WithContext

1536.05kB 3.19% 86.04% 4096.31kB 8.52% database/sql.convertAssign

1024.05kB 2.13% 88.17% 1024.05kB 2.13% github.com/NCAR/ACS/vendor/github.com/gorilla/sessions.NewSession (inline)

561.50kB 1.17% 89.34% 561.50kB 1.17% html.init

520.04kB 1.08% 90.42% 520.04kB 1.08% regexp.(*bitState).push

512.88kB 1.07% 91.48% 512.88kB 1.07% sync.(*Map).LoadOrStore

512.38kB 1.07% 92.55% 512.38kB 1.07% regexp.onePassCopy

512.14kB 1.06% 93.61% 512.14kB 1.06% github.com/NCAR/ACS/vendor/github.com/gorilla/context.Set

512.14kB 1.06% 94.68% 512.14kB 1.06% github.com/NCAR/ACS/vendor/github.com/jinzhu/gorm.parseTagSetting

512.14kB 1.06% 95.74% 512.14kB 1.06% reflect.mapassign

512.05kB 1.06% 96.81% 512.05kB 1.06% context.(*cancelCtx).Done

512.05kB 1.06% 97.87% 512.05kB 1.06% net/http.(*persistConn).roundTrip

512.02kB 1.06% 98.94% 512.02kB 1.06% encoding/gob.decString

512.01kB 1.06% 100% 512.01kB 1.06% database/sql.asString

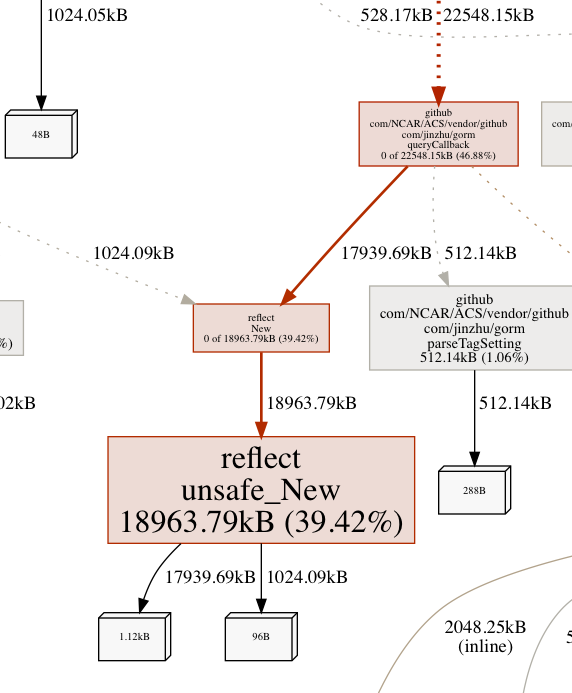

Nothing too suprising here. Using ~48MB ram. reflect.unsafe_New is dominating. What is calling reflect.unsafe_New so often? We can use (pprof) web or (pprof) png to get an edge weighted call directed call graph.

Neato! Well, reflect.unsafe_New belongs strictly to gorm - the database library. Ok. So that makes sense. gorm uses reflect to fetch struct tags and whatnot to store and retrieve data from the database - that is the whole point of the package after all. Looking back over the graph and output of top, it’s not clear at start what is leaking memory - but that isn’t exactly surprising: leaks happen over time. Let’s give it some time. From previous experience, the leak becomes more obvious after ~20 minutes.

Take another snapshot. Rinse and repeat. Something is slightly different:

λ go tool pprof 1c095e0818f595cf7e6f065334cda1aadfbc09ec-3/heap.pb.gz

File: acs-daemon

Build ID: 9f52a8408ff14ff129c7b44bfd637d7f350a1139

Type: inuse_space

Time: Jul 16, 2018 at 2:34pm (MDT)

Entering interactive mode (type "help" for commands, "o" for options)

(pprof) top 20

Showing nodes accounting for 72.50MB, 94.16% of 77MB total

Showing top 20 nodes out of 176

flat flat% sum% cum cum%

19.02MB 24.70% 24.70% 19.02MB 24.70% reflect.unsafe_New

14.01MB 18.19% 42.89% 14.01MB 18.19% net/textproto.(*Reader).ReadMIMEHeader

8.83MB 11.46% 54.35% 8.83MB 11.46% github.com/NCAR/ACS/vendor/github.com/mkevac/debugcharts.init.0

5.50MB 7.14% 61.50% 5.50MB 7.14% net/http.(*Request).WithContext

5MB 6.50% 67.99% 12.50MB 16.24% github.com/NCAR/ACS/vendor/github.com/gorilla/sessions.(*Registry).Get

3.08MB 4.00% 71.99% 3.08MB 4.00% regexp.(*bitState).reset

3MB 3.90% 75.89% 3MB 3.90% github.com/NCAR/ACS/vendor/github.com/gorilla/context.Set

2MB 2.60% 78.49% 2MB 2.60% reflect.mapassign

2MB 2.60% 81.09% 2MB 2.60% database/sql.cloneBytes (inline)

1.50MB 1.95% 83.04% 1.50MB 1.95% github.com/NCAR/ACS/vendor/github.com/gorilla/sessions.NewSession (inline)

1.50MB 1.95% 84.98% 1.50MB 1.95% net.sockaddrToTCP

1.50MB 1.95% 86.93% 4MB 5.19% database/sql.convertAssign

1.50MB 1.95% 88.88% 1.50MB 1.95% encoding/gob.decString

1MB 1.30% 90.18% 7.50MB 9.74% github.com/NCAR/ACS/vendor/github.com/gorilla/sessions.(*CookieStore).New

0.55MB 0.71% 90.89% 0.55MB 0.71% html.init

0.51MB 0.66% 91.55% 0.51MB 0.66% regexp.(*bitState).push

0.50MB 0.65% 92.20% 0.50MB 0.65% github.com/NCAR/ACS/structs/speca.NewFrame

0.50MB 0.65% 92.85% 0.50MB 0.65% github.com/NCAR/ACS/structs/speca.Sweep.Maximum

0.50MB 0.65% 93.51% 0.50MB 0.65% sync.(*Map).LoadOrStore

0.50MB 0.65% 94.16% 0.50MB 0.65% bytes.makeSlice

We are now using 72.5MB of RAM. Ouch. It looks like ReadMIMEHeader is growing, and components of various gorilla components are now prominent. We could spend time flipping back and forth between the outputs of sample 1 and sample 2, but it would be better to just use functionality the tool provides. There is a --base flag that tells the pprof to use one sample as the basis and then deal with only the diffs. How handy! It’s like the tool was built for doing this sort of thing.

λ go tool pprof -base heap.pb.gz ../1c095e0818f595cf7e6f065334cda1aadfbc09ec-3/heap.pb.gz

File: acs-daemon

Build ID: 9f52a8408ff14ff129c7b44bfd637d7f350a1139

Type: inuse_space

Time: Jul 16, 2018 at 2:05pm (MDT)

Entering interactive mode (type "help" for commands, "o" for options)

(pprof) top 20

Showing nodes accounting for 30750.61kB, 96.78% of 31774.71kB total

Dropped 2 nodes (cum <= 158.87kB)

Showing top 20 nodes out of 103

flat flat% sum% cum cum%

10757.38kB 33.86% 33.86% 10757.38kB 33.86% net/textproto.(*Reader).ReadMIMEHeader

4096.94kB 12.89% 46.75% 4096.94kB 12.89% net/http.(*Request).WithContext

3073.03kB 9.67% 56.42% 7681.58kB 24.18% github.com/NCAR/ACS/vendor/github.com/gorilla/sessions.(*Registry).Get

2560.59kB 8.06% 64.48% 2560.59kB 8.06% github.com/NCAR/ACS/vendor/github.com/gorilla/context.Set

1536.42kB 4.84% 69.31% 1536.42kB 4.84% reflect.mapassign

1536.07kB 4.83% 74.15% 1536.07kB 4.83% net.sockaddrToTCP

1024.05kB 3.22% 77.37% 4608.55kB 14.50% github.com/NCAR/ACS/vendor/github.com/gorilla/sessions.(*CookieStore).New

1024.03kB 3.22% 80.59% 1024.03kB 3.22% encoding/gob.decString

528.17kB 1.66% 82.26% 528.17kB 1.66% regexp.(*bitState).reset

514.63kB 1.62% 83.88% 514.63kB 1.62% github.com/NCAR/ACS/structs/speca.NewFrame

514.63kB 1.62% 85.50% 514.63kB 1.62% github.com/NCAR/ACS/structs/speca.Sweep.Maximum

512.56kB 1.61% 87.11% 512.56kB 1.61% bytes.makeSlice

-512.05kB 1.61% 85.50% -512.05kB 1.61% net/http.(*persistConn).roundTrip

512.04kB 1.61% 87.11% 512.04kB 1.61% github.com/NCAR/ACS/vendor/github.com/gorilla/sessions.NewSession (inline)

512.03kB 1.61% 88.72% 512.03kB 1.61% context.WithCancel

512.02kB 1.61% 90.33% 512.02kB 1.61% context.WithValue

512.02kB 1.61% 91.94% 3072.61kB 9.67% github.com/NCAR/ACS/vendor/github.com/gorilla/sessions.GetRegistry

512.02kB 1.61% 93.55% 512.02kB 1.61% runtime.systemstack

512.02kB 1.61% 95.17% 512.02kB 1.61% net/textproto.(*Reader).ReadLine

512.01kB 1.61% 96.78% 512.01kB 1.61% reflect.unsafe_New

From top we see that acs has scarfed up another 30MBs, but a large bulk of this is taken by gorilla packages. Interesting. Given that gorilla/sessions is only directly used by one package in acs: routes/auth as part of handling session cookies. routes.auth was written pretty early on before more experience with the HTTP protocol. It uses session strictly for shoveling User data into the request, and probably isn’t the best way. These discoveries lead to reworking the auth package to remove the need to use gorilla/session. gorilla/securecookie and gorilla/context, which has been mostly superceded by the standard libraries context.

Hopefully this has given enough information on how to use pprof to aid in checking for memory leaks. As a useful note, the difference between working with heap and profile (CPU usage) snapshots is strictly the name. The tooling works the same.